https://www.viribusmusic.com/shop/two-blue-circles-for-classical-guitar

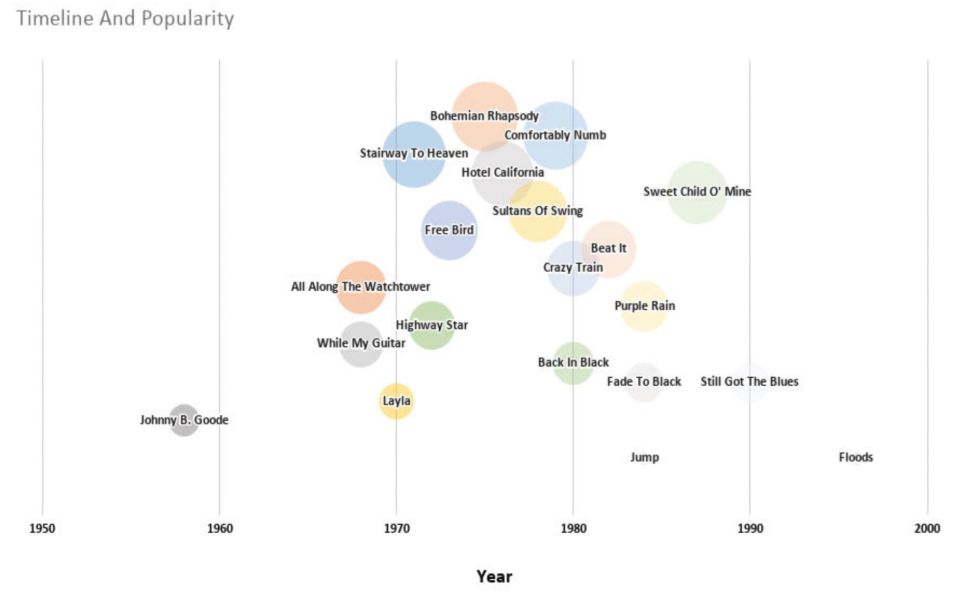

Originally commissioned for Total Guitar, this examination of the reader-voted top 50 guitar solos is now publicly available on Guitar World.

Engaging directly with time-feel and micro-timing on the electric guitar through specific exercises and style studies. This is for players of many levels who want to draw focus to the elements of groove that are often excluded, glossed over or mythologized in the learning process.

A live video presentation at the fantastic 21st Century Guitar Conference “in” Lisbon, March 2021, hosted by the wonderful Amy Brandon and Rita Torres. ‘Digital Self-Sabotage’ explores we guitarists’ deep and twisted engagement with the fretboard, and how technology can expand and disrupt this bond for learning and insight.

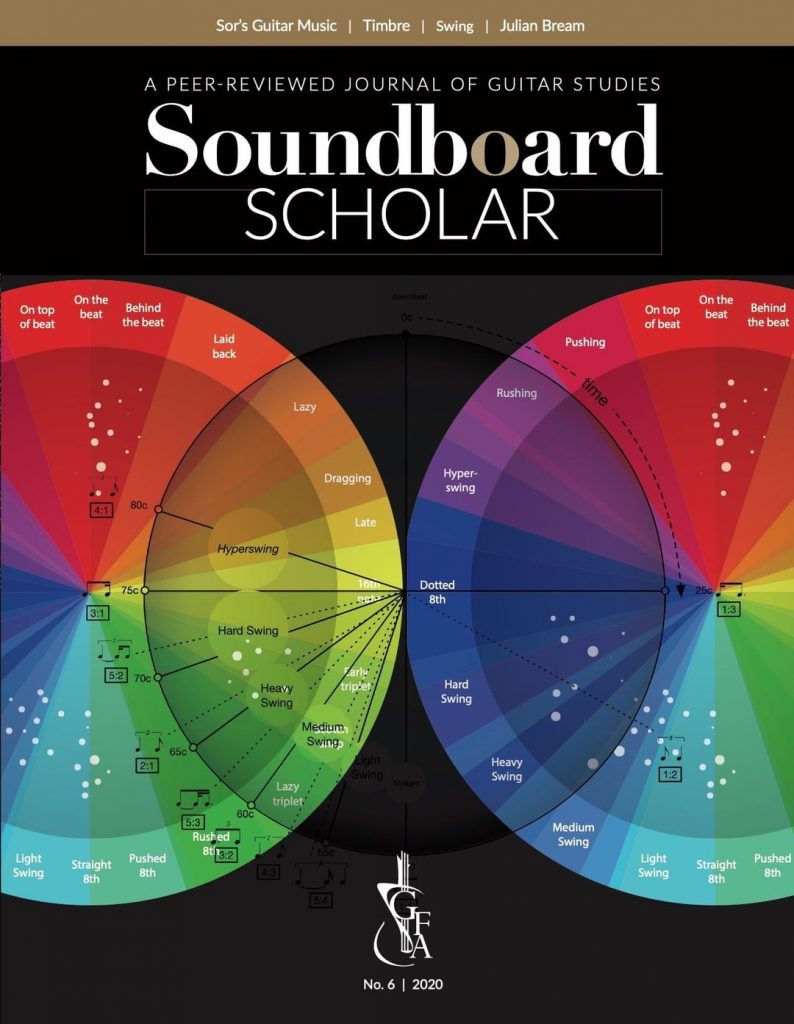

Soundboard Scholar (No.6) features my paper. “Monitored Freedom: Swing Rhythm in the Jazz Arrangements of Roland Dyens” examines the time-feel in the performances and scores of Roland Dyens, with particular reference to his arrangement of Nuages and Django’s performances of this piece. Working with the genius Jonathan Leathwood is always a privilege and joy, and I am very grateful that my illustration is used as the cover image of the journal. Available here.

CLP was featured in the Metro newspaper tech section on Friday 13th November 2020. More on the project:

In a collaboration with Dr Enzo De Sena, the mutations of the Covid-19 virus over 500 generations are sonified. Every motif is formed by the mutation of successive Covid-19 strains. Although ‘musical’ decisions are made, they are made not to cloak the data with familiar emotional signs but to reveal the hidden music of the mutations, since motivic similarity and variation are the shared language of music and biology. Despite the remarkable amount of change, it should be appreciated that Covid-19 is in fact relatively stable, so the hope for an effective vaccine remains.

– Milton

This project tries to sonify the genetic mutations of COVID-19 as they are observed over time. The main aim of the project is to satisfy a personal curiosity and for artistic purposes. However, it is hoped that sonifying the mutations could highlight patterns that would not be picked up otherwise.

The current sonification methodology associates notes’ timing to the position of the mutation within the DNA and pitch to the type of nucleotide mutation (e.g. G->A, or C->T etc). This means that the position of the mutation results in different rhythmic placement, and the type of nucleotide mutation results in different melodies.

– Enzo

To follow the project visit: miltonline.com/covid-sonify

The Careful project has expanded and developed to now support student nurses during the pandemic. The project has been disseminated by The Stage and Medical News Sites.

“A set of drama-based resources to help Kingston University, London nursing students working during the Covid-19 pandemic have been put together through a collaboration with world-leading performing arts school Guildhall School of Music & Drama, London.”

https://www.news-medical.net/news/20200621/Drama-based-resources-help-Kingston-University-nursing-students-to-cope-with-coronavirus-pandemic.aspx

https://www.thestage.co.uk/news/coronavirus-guildhall-creates-online-drama-resources-for-nursing-students

The genome is formed by a long sequence of 4 types of nucleotides: adenine (A), guanine (G), cytosine (C), and uracil (U). This sequence is used to synthesise specific proteins such as the “Spike protein”, which gives COVID-19 its crown-like appearance. A mutation happens when a nucleotide is changed, inserted or deleted in the sequence.
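As a rough illustration of the three kinds of mutation just described, the sketch below compares a reference sequence with a later strain. It assumes the two sequences have already been aligned to equal length, with `-` marking a gap. The function name `find_mutations` and the `(position, old, new)` tuple layout are hypothetical and are not taken from the project's own parser.

```python
def find_mutations(reference, sample):
    """Return (position, old_base, new_base) for every differing column.

    Both strings must already be aligned to the same length, with '-'
    marking a gap (an assumption of this sketch, not the project's format).
    """
    if len(reference) != len(sample):
        raise ValueError("sequences must be aligned to the same length")
    mutations = []
    for position, (old, new) in enumerate(zip(reference, sample)):
        if old != new:
            # old == '-' is an insertion, new == '-' is a deletion,
            # anything else is a substitution.
            mutations.append((position, old, new))
    return mutations


# Toy sequences, invented purely for illustration.
aligned_reference = "AUGC-A"
aligned_sample = "ACG-UA"
print(find_mutations(aligned_reference, aligned_sample))
```

Positions here are zero-based column indices in the alignment; a real pipeline would probably need to convert them back to coordinates on the reference genome.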

The current sonification methodology associates notes’ timing to the position of the mutation within the genome and pitch to the type of nucleotide mutation (e.g. adenine to guanine). This means that the position of the mutation results in different rhythmic placement, and the type of nucleotide mutation results in different melodies, giving each genome its own musical signature. Furthermore, repeating musical patterns mean that a mutation has persisted over time.

Note that although it may seem like the number of mutations is large, COVID-19 is actually considered a relatively stable virus.

The project uses the database of the National Center for Biotechnology Information (NCBI), which is being updated every day with new COVID-19 sequences coming from research centres across the world. The website is available here: NCBI Covid-19 page

The data is parsed and downloaded using the covid-genome-matlab-parser.

The first step is to obtain the mutations from the NCBI dataset:

The second step is to translate the mutations into music. Below are two examples of how to do this.

Below are the details of the procedure to generate the sound from the mutations:

The “–” sign indicates an insertion (when it appears as the old base) or a deletion (when it appears as the new base); all other types of mutations are ignored.

| Old base | New base | MIDI note | Note |
|---|---|---|---|
| C | – | 47 | B2 |
| U | – | 48 | C3 |
| A | – | 49 | C♯3/D♭3 |
| G | – | 50 | D3 |
| G | A | 51 | D♯3/E♭3 |
| G | U | 52 | E3 |
| G | C | 53 | F3 |
| A | G | 54 | F♯3/G♭3 |
| A | U | 55 | G3 |
| A | C | 56 | G♯3/A♭3 |
| U | G | 57 | A3 |
| U | A | 58 | A♯3/B♭3 |
| U | C | 59 | B3 |
| C | G | 60 | C4 |
| C | A | 61 | C♯4/D♭4 |
| C | U | 62 | D4 |
| – | G | 63 | D♯4/E♭4 |
| – | A | 64 | E4 |
| – | U | 65 | F4 |
| – | C | 66 | F♯4/G♭4 |
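The pitch table above can be expressed as a simple lookup. The sketch below is a hedged illustration only: it takes mutations as hypothetical `(position, old, new)` tuples, writes the table's “–” as an ASCII `-`, and assumes that onset time scales linearly with position across a phrase of fixed length. The linear timing rule, the function name `mutations_to_notes` and the parameters `genome_length` and `phrase_seconds` are assumptions, since the text only says that timing follows position.

```python
# (old base, new base) -> MIDI note, copied from the table above.
MIDI_NOTE = {
    ("C", "-"): 47, ("U", "-"): 48, ("A", "-"): 49, ("G", "-"): 50,
    ("G", "A"): 51, ("G", "U"): 52, ("G", "C"): 53,
    ("A", "G"): 54, ("A", "U"): 55, ("A", "C"): 56,
    ("U", "G"): 57, ("U", "A"): 58, ("U", "C"): 59,
    ("C", "G"): 60, ("C", "A"): 61, ("C", "U"): 62,
    ("-", "G"): 63, ("-", "A"): 64, ("-", "U"): 65, ("-", "C"): 66,
}


def mutations_to_notes(mutations, genome_length, phrase_seconds=4.0):
    """Turn (position, old, new) tuples into sorted (onset_seconds, midi_note) pairs."""
    notes = []
    for position, old, new in mutations:
        pitch = MIDI_NOTE.get((old, new))
        if pitch is None:
            continue  # mutation type not in the table: skipped
        # Assumed timing rule: position maps linearly onto the phrase.
        onset = phrase_seconds * position / genome_length
        notes.append((onset, pitch))
    return sorted(notes)


# Toy data on a 100-base "genome", invented for illustration.
print(mutations_to_notes([(50, "C", "U"), (25, "A", "-")], genome_length=100))
```

Under this timing rule, a mutation that persists across successive genomes lands on the same beat with the same pitch each time, which is one way the repeating patterns mentioned earlier could arise.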

| Protein name | Group | Instrument | Angle |
|---|---|---|---|
| NSP1 | 1 | Cello | -45° |
| NSP2 | 1 | Cello | -45° |
| NSP3 | 2 | Cello | -30° |
| NSP4 | 3 | Cello | -15° |
| NSP5 | 3 | Cello | -15° |
| NSP6 | 3 | Cello | -15° |
| NSP7 | 3 | Cello | -15° |
| NSP8 | 3 | Cello | -15° |
| NSP9 | 4 | Cello | 0° |
| NSP10 | 4 | Cello | 0° |
| NSP12 | 4 | Cello | 0° |
| NSP13 | 5 | Cello | +15° |
| NSP14 | 5 | Cello | +15° |
| NSP15 | 5 | Cello | +15° |
| NSP16 | 5 | Cello | +15° |
| S | 6 | Double bass | +30° |
| ORF3a | 7 | Violin | +45° |
| E | 7 | Violin | +45° |
| M | 7 | Violin | +45° |
| ORF6 | 7 | Violin | +45° |
| ORF7a | 7 | Violin | +45° |
| ORF8 | 7 | Violin | +45° |
| N | 7 | Violin | +45° |
| ORF10 | 7 | Violin | +45° |
| Non-coding DNA | 8 | Violin | +45° |
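The table assigns each protein group an angle but does not say how that angle is rendered. As a simple stand-in, the sketch below uses ordinary constant-power stereo panning, which is not necessarily the spatialisation the project uses. It assumes that negative angles sit to the left and that ±45° are the extreme positions; `stereo_gains` and `GROUP_ANGLE_DEG` are hypothetical names.

```python
import math

# Group -> angle in degrees, copied from the table above.
GROUP_ANGLE_DEG = {1: -45, 2: -30, 3: -15, 4: 0, 5: 15, 6: 30, 7: 45, 8: 45}


def stereo_gains(angle_deg, max_angle_deg=45.0):
    """Constant-power (left, right) gains for an angle in [-max, +max] degrees."""
    clamped = max(-max_angle_deg, min(max_angle_deg, angle_deg))
    # Map -max..+max onto 0..pi/2 so that left^2 + right^2 is always 1.
    theta = (clamped + max_angle_deg) / (2 * max_angle_deg) * (math.pi / 2)
    return math.cos(theta), math.sin(theta)


for group, angle in GROUP_ANGLE_DEG.items():
    left, right = stereo_gains(angle)
    print(f"group {group}: {angle:+d} deg -> L={left:.3f} R={right:.3f}")
```

With these assumptions group 1 is panned hard left, group 4 is centred, and groups 7 and 8 are hard right.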

Here, over 500 genome sequences are translated into two octaves of a B minor scale. The translations are selected by mapping the most common mutation types (‘note deltas’ above) onto the most common diatonic scale degrees in a sample of Western Art Music (see Huron 2008)[6]. This results in familiar melodic motifs for the most commonly retained mutations and pandiatonic blurring for the more novel mutations. At the tempo selected this results in a surprisingly engaging piece of music lasting over 42 minutes, where the language of mutation is translated into the language of motivic transformation, a deeper sonification beyond arbitrary chromatic or ‘safe’ scale choices. This is performable by choir and organ, but it is here rendered with MIDI instrumentation in Ableton Live with UAD and Native Instruments plugins.
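The frequency-matching idea in this paragraph can be sketched as a rank-to-rank mapping: the most common mutation type gets the most common pitch, and so on down both lists. This is a minimal illustration only. The pitch ordering below is a placeholder and not Huron's published statistics, and `rank_map` and the `"C>U"`-style labels are hypothetical.

```python
from collections import Counter


def rank_map(observed_types, pitches_by_rank):
    """Pair mutation types, most frequent first, with pitches in rank order."""
    ranked_types = [kind for kind, _ in Counter(observed_types).most_common()]
    # zip stops at the shorter list, so surplus rare types stay unmapped.
    return dict(zip(ranked_types, pitches_by_rank))


# Placeholder ranking of B natural minor pitches (MIDI numbers); the real
# ordering would come from corpus statistics, which are not reproduced here.
PLACEHOLDER_PITCHES = [59, 66, 62, 64, 61, 67, 69]

# Toy observations, invented for illustration.
observed = ["C>U"] * 3 + ["G>A"] * 2 + ["A>G"]
print(rank_map(observed, PLACEHOLDER_PITCHES))
```

Because the mapping depends only on rank, swapping in a different corpus ranking or a different scale changes the melodic result without touching the mutation data.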

Please keep the coding convention.

We are particularly interested in collaborations with Molecular Biologists. Contact enzodesena AT gmail DOT com if interested.

We are both with the Department of Music and Media, University of Surrey, Guildford, UK (University of Surrey DMM Website).

We would like to thank Gemma Bruno (Telethon Institute of Genetics and Medicine, Italy) and Niki Loverdu (KU Leuven, Belgium) for the useful discussions on the topic. We would also like to thank our friends and colleagues for their useful feedback on the presentation.

The project also uses internally:

[1] Durbin, R., Eddy, S., Krogh, A., and Mitchison, G. (1998). Biological Sequence Analysis (Cambridge University Press).

[2] H. Hacıhabiboğlu, E. De Sena, Z. Cvetković, J.D. Johnston and J. O. Smith III, “Perceptual Spatial Audio Recording, Simulation, and Rendering,” IEEE Signal Processing Magazine vol. 34, no. 3, pp. 36-54, May 2017.

[3] E. De Sena, Z. Cvetković, H. Hacıhabiboğlu, M. Moonen, and T. van Waterschoot, “Localization Uncertainty in Time-Amplitude Stereophonic Reproduction,” IEEE/ACM Trans. Audio, Speech and Language Process. (in press).

[4] E. De Sena, H. Hacıhabiboğlu, and Z. Cvetković, “Analysis and Design of Multichannel Systems for Perceptual Sound Field Reconstruction,” IEEE Trans. on Audio, Speech and Language Process., vol. 21 , no. 8, pp 1653-1665, Aug. 2013.

[5] E. De Sena, H. Hacıhabiboğlu, Z. Cvetković, and J. O. Smith III “Efficient Synthesis of Room Acoustics via Scattering Delay Networks,” IEEE/ACM Trans. Audio, Speech and Language Process., vol. 23, no. 9, pp 1478 – 1492, Sept. 2015.

[6] Huron, D. (2008) Sweet Anticipation: Music and the Psychology of Expectation. MIT Press

[7] https://www.nytimes.com/interactive/2020/04/03/science/coronavirus-genome-bad-news-wrapped-in-protein.html (Accessed on: 8/3/2020)

This project is licensed under the GNU License. The code will be made available soon. In the meantime, contact enzodesena AT gmail DOT com if interested.

You use the code, data and findings here at your own risk. See the LICENSE.md file for details.

A pleasure to take part in this BBC Scotland documentary on sleep, presented by Ian Hamilton and produced by Laura Kingwell. Ian, who is blind, has a particular interest in sleep disorders and the work of Prof Debra Skene, a key collaborator in Sound Asleep. First broadcast 29/3/20 on Good Morning Scotland on BBC Radio Scotland.

A cute little Arvo Pärt machine, for creating tintinnabuli voices (demo, background, tutorial and free download below).

Latest version available on Max4Live.com or Download here if you want v1.3 in a live set, free to use, but please leave a comment, rate kindly and credit Milton Mermikides (miltonline.com). Do let me know here about any published or exciting work you are doing with it 🙂

British Psychosocial Oncology Conference Feb 27-28 2020

Accessing patients’ perspectives of acute lymphoblastic leukaemia (ALL) through the arts

Hosted by Dr Alex and Dr Milton Mermikides

Milton is Reader in Music at University of Surrey. Alex is Doctoral Programme Leader at the Guildhall School of Music & Drama

Bloodlines is a dance-lecture performance depicting a patient’s experiences of ALL and its treatment through stem cell transplant. Here, its makers, Milton (a composer and ALL survivor) and Alex (theatre-maker and his sibling bone marrow donor), share extracts of the work and reveal some of the techniques they used to turn their personal experience, and their medical data, into an artwork.

Bloodlines was first performed at the Science Museum, London and has since been seen by medical students and professionals, patient groups and general audiences. It has featured in the Times Higher Education and on Midweek (BBC Radio 4). Its development was funded by the Arts and Humanities Research Council.

https://www.bpos.org

Lecture and Workshop for Ableton at Glasgow’s Question Session in the beautiful Lighthouse venue. Make music from anything.

Feb 8 2020 Free Entry – For all Info: https://www.questionsession.co.uk

For centuries, composers have been reaching out beyond the musical world into nature, science and other disciplines for inspiration. Pythagoras conceived of a harmony created by the orbiting planets (The Music of the Spheres), Newton attached 7 colours of the rainbow to the notes of a scale, composers like J.S. Bach encoded their names into musical motifs, and Villa-Lobos wrote melodies tracing the New York skyline. This workshop enables musicians of all styles to tap into this vast and profound craft of ‘data-music’. This long-established but niche craft has now been given a profound renaissance with contemporary technology: Ableton Live with bespoke Max for Live devices (available to participants in the workshop and distributed online) allows a world of real-time music creativity beyond the limits of human imagination. We will demonstrate such techniques as the automatic translation of your name into melodies, works of art into rhythms, spider webs into virtual harps, live weather reports into MIDI controls and countless other possible translations. This approach provides a uniqueness and profound meaning to your music-making whatever your stylistic interest, allowing you to tap into the infinite and uncharted universe of musical creativity.

Pleasing to see my ‘postcard’ analyses of Arvo Pärt’s Beatitudes and Spiegel Im Spiegel published in Andrew Shenton’s fine new work Arvo Pärt’s Resonant Texts (Cambridge University Press).

Here they are without explanation.

I’ll be keynote speaker-erm-ing at the Royal Society of Medicine, London, on Tuesday 4th September.

More details here and at the link above:

Join us as we explore the links between sleep, sleep disorders and all forms of art, literature, and music including modern digital media.

We will look at the effect of music in the sleeping brain, the portrayal of sleep and sleep disorders within works of art, the perils and pleasures of sleep apps and their effect on the public perception of sleep and the literature of dreams.

You will learn to:

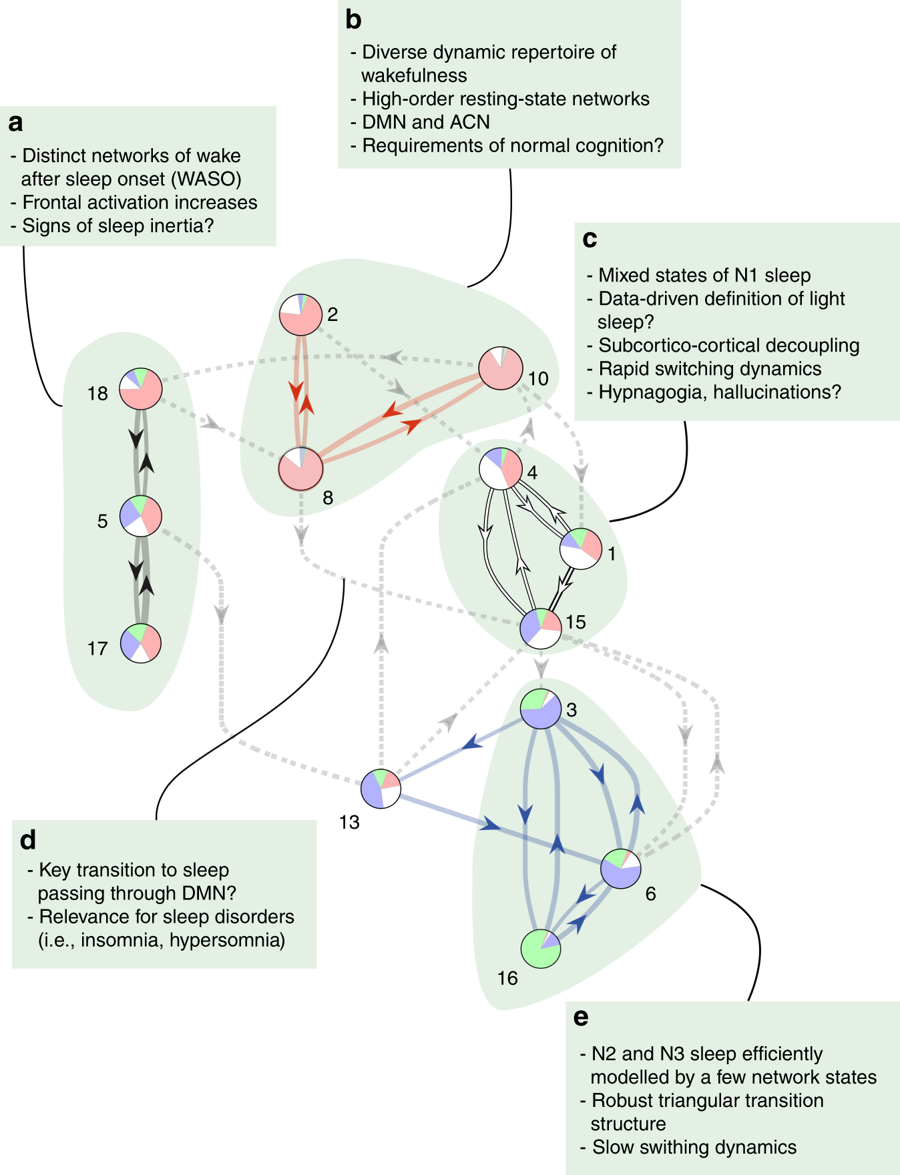

Sound Asleep was filmed by Jake Davison, Kate Wallace, Josephine Hannon and Megan Brown. They interviewed Professor Morten Kringelbach, a neuroscientist at the University of Oxford, and Professor Milton Mermikides, a composer, guitarist and music theorist.

Jake explains: “The film is about the discovery of newly described changes in brain activity during sleep by Professor Kringelbach and his team, and how conversion of these findings to music could provide a useful diagnostic tool and possibly a therapeutic for sleep disorder treatment. We decided to cover this story because, in the age of smartphones, tablets and a 24/7 world, the quality of our sleep is decreasing and its importance is often overlooked. This new model of sleep brain activity developed by Professor Kringelbach and his team, as well as his collaboration with Professor Milton Mermikides to produce musical compositions from this data, will help us understand the mechanism of sleep better and therefore allow us to improve our own sleep. Both the ground-breaking nature of this research and the unorthodox method of utilising music to potentially unlock more discoveries seemed intriguing to us and something that needed to be heard about.”

The keynote presentation ‘Plucked from Thin Air’ on improvisation and the guitar, was presented as part of the International Guitar Research Centre’s 2019 Conference at the Hong Kong Academy of the Performing Arts on Tuesday 16th July 2019 to an international cohort of guitar scholars and practitioners. Borges, Coltrane and m-finity.

A collaboration with Professor Morten Kringelbach, producing music based on his team’s research into the transitional networks involved during sleep, is featured on BBC Radio 4’s weekly science podcast Inside Science, broadcast 28/3/19 at 16:30 and 21:00. Catch up here to listen, and here for the wonderful research paper “Discovery of key whole-brain transitions and dynamics during human wakefulness and non-REM sleep” (Nature Comms).

How fast was the petition to revoke Article 50 signed in the 24 hours surrounding Theresa May’s speech on March 20th 2019?

Well if a note was played on the piano every time the petition was signed it would sound (and look) like this.

Ingredients:

Ableton Live 10 + Max/MSP + Excel + UAD Apollo + Adam Audio S2V + NI S88 Keyboard with Light Guide + NI Komplete + Lava Lamp

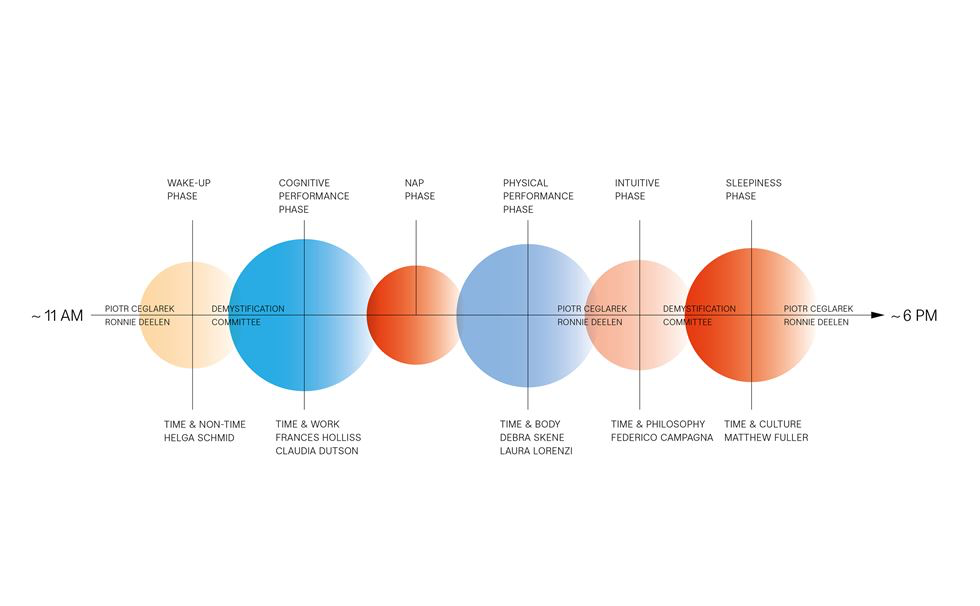

23 February 2019: the Sound Asleep project was presented by Prof Debra Skene at the Designing Time event at the Design Museum, London:

A day of talks and live performances exploring the design of alternative time systems, based on daily bodily rhythms (known as circadian rhythm).

Currently, ‘clock time’ structures and directs human behaviour. But are there alternative time systems that are better for our health, happiness and overall productivity? Rather than being guided by the clock, the installation investigates new time systems and ways of living.

A series of participatory events and activities over the course of the weekend will test and challenge the structures and rhythms of contemporary life. On Saturday evening there will be a public talk by scientists, artists and designers on the design of time and the nature of temporality.

A lovely discussion with BBC Studio Manager (and Radiophonic scholar) Jo Langton and presenter Tom Service on Radio 3’s Hidden Voices series on Music Matters. Kathleen Schlesinger’s The Greek Aulos, Ancient Greek Modes, microtonality, the work of Elsie Hamilton and its legacy today.

Book for the free December 5th 2018 6pm event at the Barbican here:

Hear what the neuroscience of falling asleep sounds like! Join composer and guitarist Milton Mermikides and Oxford Professor Morten Kringelbach – an expert in the neuroscience of pleasure – as they explore the musical qualities of sleep. This exciting dialogue will cover the science of sleep and its parallels with musical composition.

Kringelbach will discuss the neuroscience of music and why it is one of the strongest and most universal sources of human pleasure. Mermikides believes everything we do is music, and that music exists from the galaxies down to subatomic particles.

Together they will look at the neuroscience of human sleep and how harmonic patterns in our sleep cycle can be used to create musical compositions reflecting sleep during both health and disease. You will hear both what good and disrupted sleep patterns sound like. You’ll also find out how our body clock differs from the 24-hour clock and how this impacts our natural sleep cycle.

Kringelbach will present his new research identifying the neural pathways for how we fall asleep. Building on this, Mermikides will present new music he has composed based on Kringelbach’s discoveries. For the first time, you will hear what the neuroscience of falling asleep sounds like.

Sound Asleep is a public lecture open to everyone. It’s part of The Physiological Society’s Sleep and Circadian Rhythms meeting taking place at the Barbican on 5-6 December 2018.